Unsupervised Object Discovery and Co-Localization

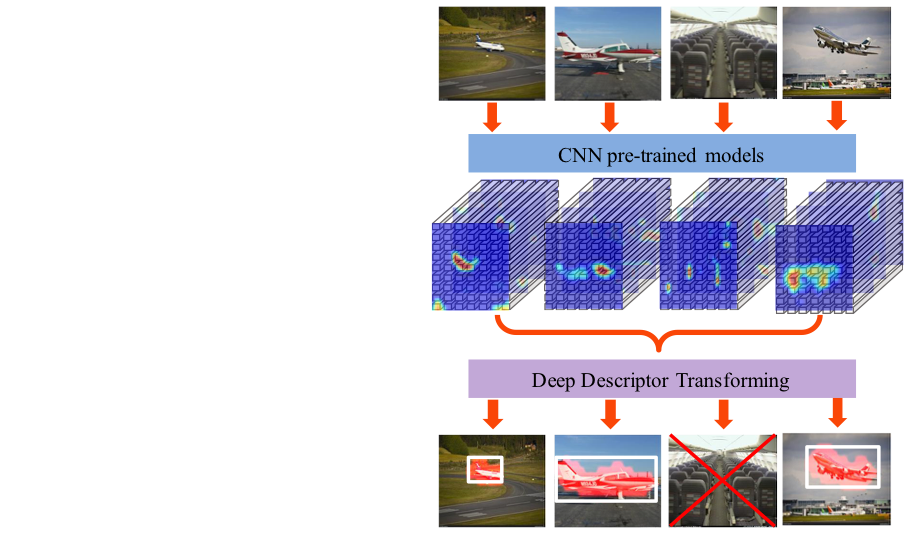

by Deep Descriptor Transformation

Authors

Abstract

Reusable model design becomes increasingly desirable with the rapid expansion of computer vision and pattern recognition applications. In this paper, we focus on the reusability of pre-trained deep convolutional models. Specifically, rather than treating pre-trained models only as feature extractors, we reveal more treasures beneath the convolutional layers: the convolutional activations can act as a detector for the common object in the object co-localization problem. We propose a simple yet effective method, termed Deep Descriptor Transformation (DDT), which evaluates the correlations of descriptors and then obtains the category-consistent regions. These regions accurately locate the common object in a set of unlabeled images, i.e., they solve object co-localization. Empirical studies validate the effectiveness of the proposed DDT method. On benchmark object co-localization datasets, DDT consistently outperforms existing state-of-the-art methods by a large margin. Moreover, DDT demonstrates good generalization to unseen categories and robustness to noisy data. Beyond that, DDT can also be employed to harvest web images as valid external data sources for improving the performance of both image recognition and object detection.
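The abstract does not spell out how the descriptor correlations are evaluated. The related papers listed below describe the transformation as a principal component analysis (PCA) over the deep descriptors of the whole image set, with positive projections onto the first principal component marking the common object. The following is a minimal sketch under that assumption, not the authors' released code: the function name `ddt_indicator_maps` is hypothetical, the inputs are assumed to be activation tensors already extracted from a pre-trained convolutional layer, and the rule used to fix the sign of the principal direction is an illustrative heuristic.

```python
import numpy as np


def ddt_indicator_maps(feature_maps):
    """Project deep descriptors onto their first principal component.

    feature_maps: list of arrays, each of shape (H_i, W_i, D), holding the
    convolutional activations of one image (assumed to be precomputed).
    Returns a list of (H_i, W_i) indicator maps; positive values are
    treated as belonging to the common object.
    """
    shapes = [f.shape[:2] for f in feature_maps]
    # Pool the descriptors of all images into one (N, D) matrix.
    descriptors = np.concatenate(
        [f.reshape(-1, f.shape[-1]) for f in feature_maps], axis=0
    )

    # Covariance of the mean-centered descriptors across the image set.
    mean = descriptors.mean(axis=0)
    centered = descriptors - mean
    cov = centered.T @ centered / centered.shape[0]

    # eigh returns eigenvalues in ascending order, so the last column
    # is the direction of largest variance.
    _, eigvecs = np.linalg.eigh(cov)
    principal = eigvecs[:, -1]
    projections = centered @ principal

    # Eigenvectors are defined only up to sign. Assumption (illustrative
    # heuristic): orient the direction so that projections grow with the
    # descriptor norm, since object regions tend to activate strongly.
    norms = np.linalg.norm(descriptors, axis=1)
    if np.dot(projections, norms - norms.mean()) < 0:
        projections = -projections

    # Split the flat projection vector back into per-image maps.
    sizes = [h * w for h, w in shapes]
    chunks = np.split(projections, np.cumsum(sizes)[:-1])
    return [c.reshape(h, w) for c, (h, w) in zip(chunks, shapes)]
```

Thresholding each returned map at zero (`indicator > 0`) gives a coarse mask at the resolution of the activation map. Turning that mask into a bounding box on the input image would additionally require upsampling and a choice of connected region, which this sketch leaves out.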

Downloads

Code

Dataset

Related Papers

X.-S. Wei, C.-L. Zhang, J. Wu, C. Shen, and Z.-H. Zhou. Unsupervised Object Discovery and Co-Localization by Deep Descriptor Transformation. Pattern Recognition, in press.

X.-S. Wei, C.-L. Zhang, Y. Li, C.-W. Xie, J. Wu, C. Shen, and Z.-H. Zhou. Deep Descriptor Transforming for Image Co-Localization. In Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI’17), Melbourne, Australia, 2017, pp. 3048-3054.